This weekend I tried out ComfyUI to see what is happening in the Stable Diffusion world. Even though Stable Diffusion no longer looks like the latest and greatest, it is still clearly an impressive tool for generating images. Most users seem to focus on creating images from text, doing style transfer, or the many other use cases that are trendy on Twitter and other social media.

One thing I wanted to try was whether the workflows can be used for photo editing, and in particular RAW image editing. It turns out that there is no existing plugin to directly load a RAW image and insert it into a ComfyUI workflow. Most of the internet mostly cares about JPEG and PNG images, I'm guessing.

More generally, it seems that most image generation models are not meant for RAW image editing, which would be a nice project to explore another day. Anyway, as a first step I decided to experiment with the ComfyUI API and came up with the most minimal extension possible: ComfyUI Raw Image Loader.

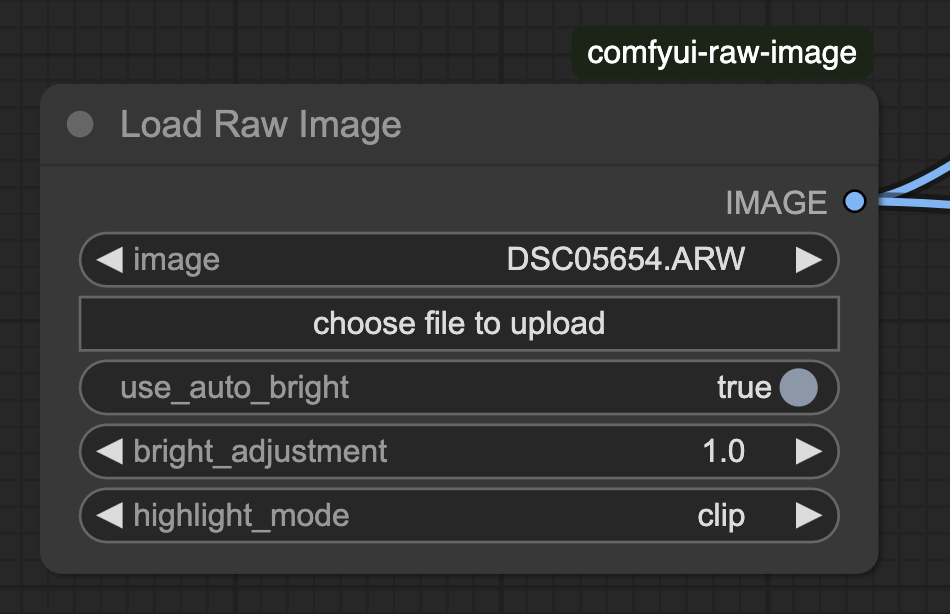

This extension provides a simple node that loads a RAW image from disk and converts it to a proper Torch tensor (or simply an IMAGE in ComfyUI vocabulary) that can then be used as input for any image-to-X workflow. I added a couple of options to adjust brightness and to control how clipped highlights are handled. I was able to set it up quickly thanks to the amazing rawpy library.
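To give an idea of what that conversion can look like, here is a minimal sketch rather than the extension's exact code. It assumes rawpy, NumPy and PyTorch are installed. The function names `postprocess_options` and `load_raw_as_image` are made up for illustration, and the way the node's options map onto rawpy's `postprocess` arguments is an assumption.

```python
def postprocess_options(use_auto_bright=True, bright_adjustment=1.0, highlight_mode="clip"):
    """Translate the node's inputs into keyword arguments for rawpy's postprocess().

    Hypothetical helper: this mapping is an assumption, not the extension's code.
    """
    if highlight_mode not in ("clip", "blend"):
        raise ValueError(f"Unsupported highlight mode: {highlight_mode}")
    return {
        "no_auto_bright": not use_auto_bright,  # rawpy exposes the negated flag
        "bright": float(bright_adjustment),     # linear brightness multiplier
        "highlight_mode": highlight_mode.capitalize(),  # name of a rawpy.HighlightMode member
        "output_bps": 16,                       # keep 16 bits per channel
    }


def load_raw_as_image(path, **node_inputs):
    """Decode a RAW file into a ComfyUI-style IMAGE tensor of shape (1, H, W, 3)."""
    # Third-party imports are kept local so the helper above works without them.
    import numpy as np
    import rawpy
    import torch

    options = postprocess_options(**node_inputs)
    options["highlight_mode"] = rawpy.HighlightMode[options["highlight_mode"]]

    with rawpy.imread(path) as raw:
        rgb = raw.postprocess(**options)  # uint16 array of shape (H, W, 3)

    # ComfyUI expects float32 values in [0, 1] with a leading batch dimension.
    image = torch.from_numpy(rgb.astype(np.float32) / 65535.0)
    return image.unsqueeze(0)
```

Decoding at 16 bits per channel before scaling to floats keeps more tonal range than going through 8-bit output, which is a large part of why starting from a RAW file is interesting.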

The overall code is very simple, and ComfyUI has good documentation on creating a new node. In short, the code boils down to:

- Create a Python class

- Define the return and input types, with their constraints and validations

- Write a main function that takes those inputs and returns the specified output

Here is a skeleton example of how to create a node extension:

```python
class LoadRawImage:
    # Define node interface metadata
    RETURN_TYPES = ("IMAGE",)  # What the node outputs
    FUNCTION = "load_img"  # Main function to call
    CATEGORY = "image"  # UI category
    DESCRIPTION = "Load a RAW image into ComfyUI."

    @classmethod
    def INPUT_TYPES(cls):
        # Define UI inputs (required and optional)
        files = get_files_from_input_directory()  # placeholder helper
        return {
            "required": {"image": (files, {"image_upload": True})},
            "optional": {
                "use_auto_bright": ("BOOLEAN", {"default": True}),
                "bright_adjustment": ("FLOAT", {"default": 1.0, "min": 0.1, "max": 3.0}),
                "highlight_mode": (["clip", "blend"], {"default": "clip"}),
            },
        }

    @classmethod
    def VALIDATE_INPUTS(cls, image):
        # Validate inputs before processing
        return True  # or return an error message string if validation fails

    @classmethod
    def IS_CHANGED(cls, image):
        # Track if inputs have changed (for caching)
        return hash_of_input  # placeholder, e.g. a hash of the file contents

    def load_img(self, image, use_auto_bright=True, bright_adjustment=1.0, highlight_mode="clip"):
        # Main processing function that matches FUNCTION name
        # Process inputs and return outputs matching RETURN_TYPES
        processed_image = process_raw_image(  # placeholder helper
            image, use_auto_bright, bright_adjustment, highlight_mode
        )
        return (processed_image,)  # Return as tuple matching RETURN_TYPES


# Register node(s) for ComfyUI to discover
NODE_CLASS_MAPPINGS = {"Load Raw Image": LoadRawImage}
```

I wonder whether ComfyUI explored using Python type annotations instead of plain strings to define inputs and outputs. At least the string approach has the advantage of being simple and universal.
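To make the type annotation idea concrete, here is a purely hypothetical sketch of how an `INPUT_TYPES`-style dictionary could be derived from a function signature. None of this is a ComfyUI API: `TYPE_NAMES`, `input_types_from_signature` and the `Example` class are invented for illustration, and only the four basic type strings are handled.

```python
import inspect

# Hypothetical mapping from Python annotations to ComfyUI type strings.
TYPE_NAMES = {bool: "BOOLEAN", int: "INT", float: "FLOAT", str: "STRING"}


def input_types_from_signature(func):
    """Build an INPUT_TYPES-style dict from a function's annotations and defaults."""
    spec = {"required": {}, "optional": {}}
    for name, param in inspect.signature(func).parameters.items():
        if name == "self":
            continue
        type_name = TYPE_NAMES[param.annotation]
        if param.default is inspect.Parameter.empty:
            # No default value: treat the input as required.
            spec["required"][name] = (type_name,)
        else:
            spec["optional"][name] = (type_name, {"default": param.default})
    return spec


class Example:
    def run(self, prompt: str, use_auto_bright: bool = True, bright_adjustment: float = 1.0):
        return (prompt,)


print(input_types_from_signature(Example.run))
```

Even in this toy version the limits show up quickly: constraints such as `min` and `max`, or a dropdown of allowed values, have no natural place in a plain annotation, which may be one reason the string-and-dict approach is attractive.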

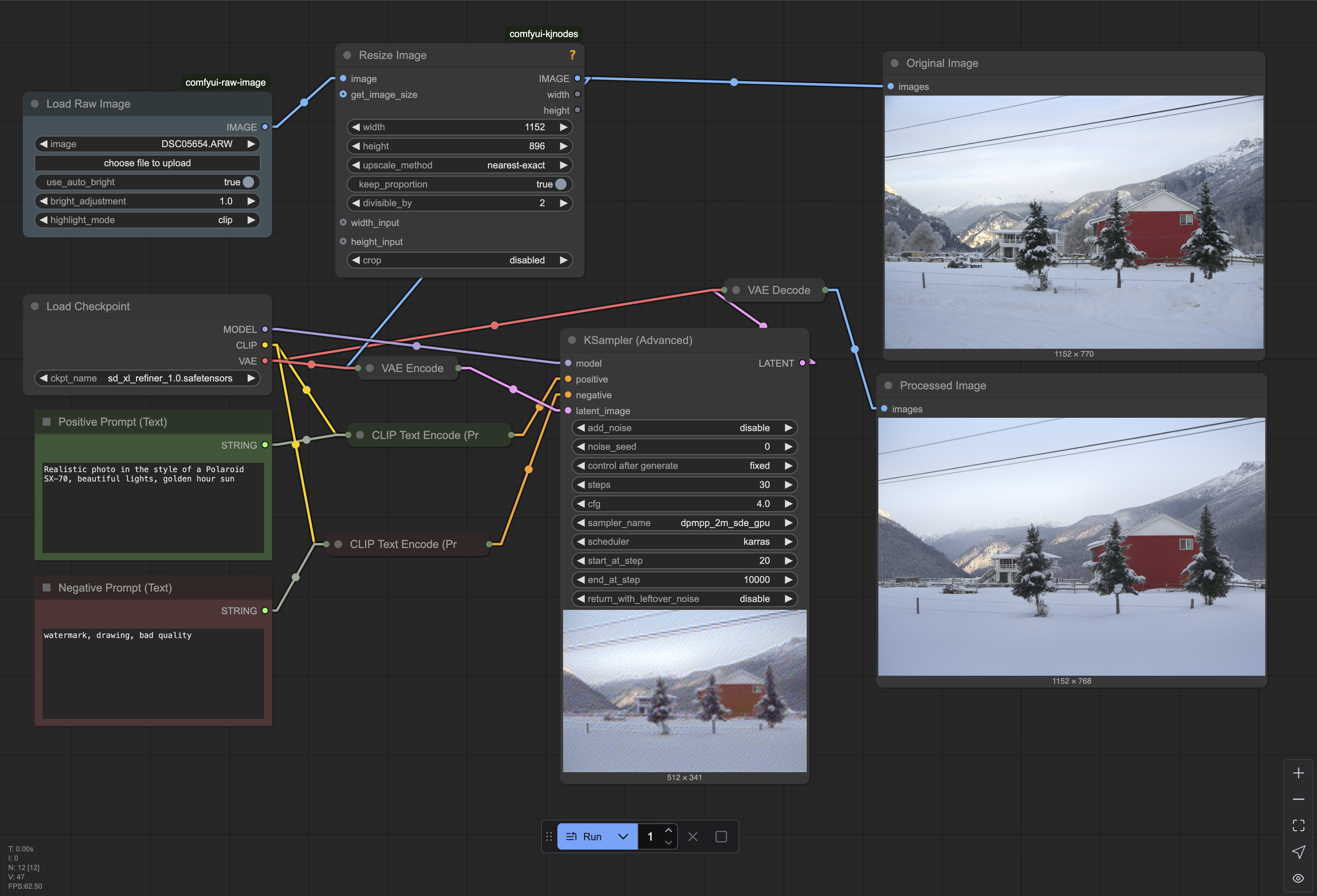

In the end, I created a simple SDXL workflow that uses a refiner to slightly change the style of an input picture. I'm experimenting further to see how to generate more complex changes and get more control over the input image.

I do believe diffusion models, LLMs, and deep learning tools more generally are good tools for people to experiment with. At a time when artists and photographers feel threatened by them, providing more ways for those people to leverage GenAI will definitely help spread knowledge.